Running End-to-End Tests

By Camilo Calvo-Alcaniz

Introduction

In an era of web development marked by large, complex single-page applications, a CI/CD pipeline that frequently builds, tests, and deploys your code is essential for robustness. However, the testing step poses a challenge: with so many test frameworks and CI/CD services out there, how do you know which tools will work well together?

I want to walk through a method our organization used to incorporate end-to-end tests into a CI/CD pipeline—one that we have found effective, easy to set up, and simple to extend. It goes like this: we run our tests inside a Docker container, and then run the Docker container on an automation server. By running tests inside a container, we ensure that their environment is consistent and reproducible, and by running the container on an automation server, we ensure that they can be run at any time and incorporated into any process.

With this idea in mind, we deliberated on which test framework and automation server would best serve our needs. This led us to our two solutions:

- For the automation server, we chose Jenkins, an open-source solution with a rich plugin ecosystem, a large user base, and an intuitive system for distributing builds and workflows.

- For the test framework, we chose CodeceptJS, a modern end-to-end testing framework with a distinctive BDD-style syntax. Test suites are written as linear scenarios that mimic a user's actions as they move through the web app under test. We chose it over other frameworks because behavior-driven development suited our testing needs, and because it makes tests simple to write and understand.

By combining these two tools, we created a workflow that can run end-to-end tests on the entire application with the click of a button. The resulting test step can be run as an ad-hoc sanity check, or can be incorporated into an existing CI/CD pipeline to ensure that code is verified before shipping out to production.

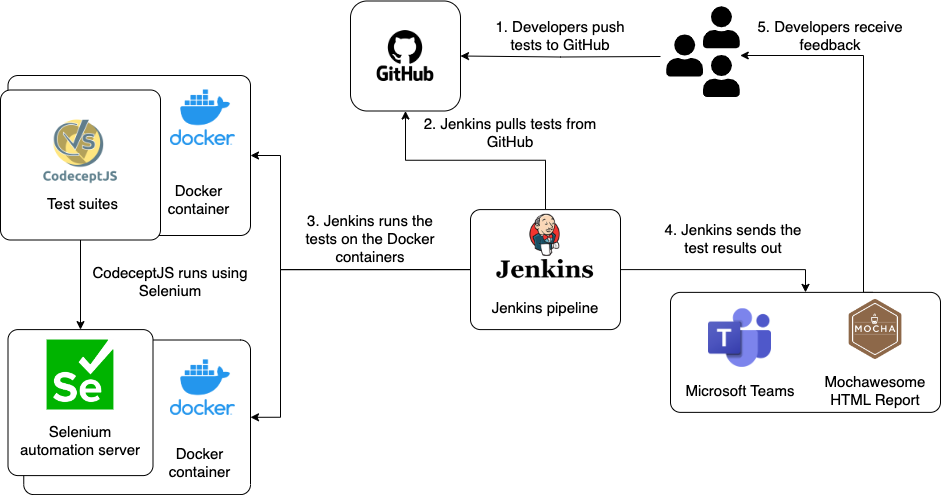

Figure 1. A high-level overview of the test automation process we implemented. Jenkins orchestrates the different components, while Docker ensures that tests run consistently. When test results are ready, they are sent back to the development team through multiple avenues.

In the sections that follow, we’ll dive into the details of each part of this process: how CodeceptJS works, creating a Docker image capable of running CodeceptJS, and running a container from that image on Jenkins.

Exploring the Architecture Behind CodeceptJS

CodeceptJS’s most distinctive feature is its syntax. Tests are written from the user’s perspective: for example, the step that opens a given page is written I.amOnPage ("I am on page"). As a result, the tests are highly readable, mirroring a real user’s journey through each feature of the application.

However, unlike self-contained testing frameworks such as Cypress, CodeceptJS cannot run tests by itself. Instead, it relies on a browser automation tool, such as Selenium WebDriver, Puppeteer, or Playwright, to manage and automate the browser. CodeceptJS translates the human-readable, BDD-style tests we write into API calls that the automation tool then performs in the browser.
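To make the translation step concrete, here is a toy TypeScript model of the idea, not CodeceptJS’s actual internals: an actor object exposes readable steps such as amOnPage and see, and turns each one into calls on a lower-level driver. The names BrowserDriver, RecordingDriver, and createActor are hypothetical, and the recording driver stands in for Selenium WebDriver so the sketch runs without a browser.

```typescript
// Toy model of a CodeceptJS-style actor (hypothetical names, not CodeceptJS internals).

// Low-level operations a browser-automation backend might expose.
interface BrowserDriver {
  navigate(url: string): void;
  type(locator: string, text: string): void;
  click(locator: string): void;
  pageContains(text: string): boolean;
}

// Readable, user-perspective steps a test author writes.
interface Actor {
  amOnPage(path: string): void;
  fillField(locator: string, value: string): void;
  click(locator: string): void;
  see(text: string): void;
}

// Translate each readable step into calls on the driver.
function createActor(driver: BrowserDriver, baseUrl: string): Actor {
  return {
    amOnPage: (path) => driver.navigate(baseUrl + path),
    fillField: (locator, value) => driver.type(locator, value),
    click: (locator) => driver.click(locator),
    see: (text) => {
      // An assertion step: fail the scenario if the text is missing
      if (!driver.pageContains(text)) {
        throw new Error(`Expected to see "${text}"`);
      }
    },
  };
}

// Stand-in driver that records calls instead of controlling a browser.
class RecordingDriver implements BrowserDriver {
  calls: string[] = [];
  pageText: string;
  constructor(pageText: string) {
    this.pageText = pageText;
  }
  navigate(url: string): void {
    this.calls.push(`navigate ${url}`);
  }
  type(locator: string, text: string): void {
    this.calls.push(`type ${locator}=${text}`);
  }
  click(locator: string): void {
    this.calls.push(`click ${locator}`);
  }
  pageContains(text: string): boolean {
    return this.pageText.indexOf(text) !== -1;
  }
}

// A scenario that reads like a CodeceptJS test.
const driver = new RecordingDriver("Welcome back, Ada");
const I = createActor(driver, "https://app.example.com");
I.amOnPage("/login");
I.fillField("#email", "ada@example.com");
I.click("Sign in");
I.see("Welcome back");
// driver.calls is now:
// ["navigate https://app.example.com/login", "type #email=ada@example.com", "click Sign in"]
```

Swapping RecordingDriver for a class that calls a real automation API is, loosely, the role that CodeceptJS helpers such as WebDriver, Puppeteer, and Playwright play.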

Because Selenium is the most widely used framework for web browser automation, we chose Selenium WebDriver, Selenium’s programming interface, as our browser automation tool. Setup was easy thanks to the codecept.conf.js file, which let us control the host, browser type, and port number, as well as additional options such as the window size and headless mode.
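A minimal sketch of such a configuration, written as codecept.conf.ts (CodeceptJS also accepts a TypeScript config). The test glob, application URL, host, window size, and Chrome arguments are assumptions for illustration, not values from the original setup; 4444 is Selenium’s default port.

```typescript
// codecept.conf.ts: a sketch of a WebDriver setup (values are assumptions).
export const config = {
  tests: "./tests/*_test.ts", // where scenario files live (assumed layout)
  output: "./output", // screenshots and reports are written here
  helpers: {
    WebDriver: {
      url: "http://localhost:3000", // application under test (assumed)
      browser: "chrome",
      host: "localhost", // where Selenium Standalone Server is reachable (assumed)
      port: 4444, // Selenium's default port
      windowSize: "1920x1080",
      desiredCapabilities: {
        // W3C-style Chrome options; --headless runs without a visible window
        "goog:chromeOptions": {
          args: ["--headless", "--disable-gpu", "--no-sandbox"],
        },
      },
    },
  },
  name: "e2e-tests",
};
```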

Running CodeceptJS on Docker

Docker is a tool that lets you manage and scale multiple applications in standardized, isolated, and reusable environments called containers. Containers are running instances of images, and images are built from a configuration file called a Dockerfile. You can download and run images directly from an image library like Docker Hub, or you can extend a base image in your own Dockerfile to install packages, set variables, and make other modifications to the image.

Because CodeceptJS requires a browser automation tool to function, we needed two containers running at the same time to perform our tests. One container ran Selenium Standalone Server, a proxy server which translates Selenium WebDriver calls into actions for the browser. Meanwhile, the other container ran our actual CodeceptJS tests.

The Selenium Standalone Server doesn’t require much customization, so we pulled its Chrome flavor directly from Docker Hub (https://hub.docker.com/r/openstax/selenium-chrome). The few settings we did need to customize, such as the port number and the allocated memory, we handled with command-line flags when the pipeline starts the container.

The CodeceptJS image, on the other hand, required significant customization. We extended the base image (https://hub.docker.com/r/codeceptjs/codeceptjs) with our own Dockerfile in order to create a directory for the test output, set the environment to production or development, and install all the necessary packages.

With our two containers prepared, we then created a Jenkins pipeline to orchestrate them, run the tests, and serve the results back to our development team.

Running Docker on Jenkins

Just as Docker images are defined by Dockerfiles, Jenkins pipelines are defined by a Jenkinsfile. This Jenkinsfile, which is stored in the automation directory alongside the test suites, contains a series of steps to be delegated to worker machines, called Jenkins nodes. (These nodes can be physical computers, virtual machines, or even other Docker containers. Our organization uses a virtual Linux machine from AWS.)

To run the tests end to end, the pipeline defined by our Jenkinsfile:

- Runs the Selenium Standalone Server in a Docker container

- Builds a custom Docker image for the CodeceptJS tests

- Runs the CodeceptJS tests in a container created from that image

- Serves the test results as an HTML report and sends a summary to Microsoft Teams

- Stops and removes all containers
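One property of this sequence is worth spelling out: reporting and cleanup should still happen when tests fail. The sketch below models that control flow in TypeScript with hypothetical Stage, runStage, and runPipeline names; it is an illustration, not our actual Jenkinsfile. In a declarative Jenkinsfile, the same guarantee is usually expressed with a post { always { ... } } block.

```typescript
// Hypothetical model of the pipeline's control flow (not the actual Jenkinsfile).
type Stage = { name: string; run: () => void };

// Run one stage, record the outcome, and report success without throwing.
function runStage(stage: Stage, log: string[]): boolean {
  try {
    stage.run();
    log.push(`${stage.name}: ok`);
    return true;
  } catch (err) {
    const reason = err instanceof Error ? err.message : String(err);
    log.push(`${stage.name}: failed (${reason})`);
    return false;
  }
}

// Main stages stop at the first failure; "always" stages run regardless,
// so results are still reported and containers are still removed.
function runPipeline(main: Stage[], always: Stage[], log: string[]): boolean {
  let ok = true;
  for (const stage of main) {
    if (!runStage(stage, log)) {
      ok = false;
      break;
    }
  }
  for (const stage of always) {
    if (!runStage(stage, log)) {
      ok = false;
    }
  }
  return ok;
}

// Usage: simulate a run in which the test stage fails.
const log: string[] = [];
const noop = (): void => {};
const ok = runPipeline(
  [
    { name: "Start Selenium container", run: noop },
    { name: "Build CodeceptJS image", run: noop },
    { name: "Run CodeceptJS tests", run: () => { throw new Error("some tests failed"); } },
  ],
  [
    { name: "Publish report and notify Teams", run: noop },
    { name: "Stop and remove containers", run: noop },
  ],
  log,
);
// ok is false, yet the last two log entries show reporting and cleanup still ran.
```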

First, the pipeline starts the Selenium Standalone Server container in a way that lets the CodeceptJS container reach it. Although the two are isolated environments, the -p flag gives them access to each other through the host environment. This flag publishes a container port on the host: in our case, it maps Selenium Standalone Server’s port to a port on the host (i.e., the Jenkins node). The server can then communicate with the CodeceptJS program as if they were operating in the same environment.
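As an illustration of the port mapping, the hypothetical helper below assembles the docker run arguments for the Selenium container. The container name and the --shm-size value are assumptions (Chrome in Docker commonly needs more than the 64 MB of shared memory Docker provides by default), and the sketch assumes the image listens on Selenium’s default port, 4444.

```typescript
// Hypothetical helper: build `docker run` arguments for the Selenium container.
// Name, host port, and shared-memory size are illustrative assumptions.
function seleniumRunArgs(hostPort: number, containerPort: number = 4444): string[] {
  return [
    "run",
    "-d", // run detached so the pipeline can move on to the next step
    "--name", "selenium",
    "-p", `${hostPort}:${containerPort}`, // publish HOST_PORT:CONTAINER_PORT
    "--shm-size=2g", // extra shared memory for Chrome (assumed value)
    "openstax/selenium-chrome",
  ];
}

// Usage: print the full command a pipeline step could run.
console.log(["docker", ...seleniumRunArgs(4444)].join(" "));
// prints: docker run -d --name selenium -p 4444:4444 --shm-size=2g openstax/selenium-chrome
```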

Next, the pipeline saves the test results. Because these results are generated inside a container, by default they disappear at the end of the pipeline when the containers are removed. The solution is the -v flag, which mounts a directory from the host into the container. Like the -p flag, it maps something in the container to the host: in our case, the test results folder in the CodeceptJS container to a matching folder on the Jenkins node.
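The same approach can illustrate the volume mount. In this hypothetical sketch, the image tag, the workspace path, and the container path /tests/output are assumptions; the container path has to match the output directory configured in codecept.conf. Host networking is also an assumption here, one way to let the tests reach Selenium’s published port at localhost.

```typescript
// Hypothetical helper: build `docker run` arguments for the CodeceptJS container.
function codeceptRunArgs(workspace: string, image: string): string[] {
  return [
    "run",
    "--rm", // delete the container once the tests finish
    "--network", "host", // share the host network so localhost:4444 reaches Selenium (assumed setup)
    "-v", `${workspace}/output:/tests/output`, // HOST_DIR:CONTAINER_DIR, so results outlive the container
    image,
  ];
}

// Usage: a workspace path and image tag made up for illustration.
console.log(["docker", ...codeceptRunArgs("/var/lib/jenkins/workspace/e2e", "e2e-codecept:latest")].join(" "));
```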

With the results extracted, the next decision was how to present them. There are several options for generating test reports: as XML, directly on the CLI, or even through a SaaS product managed by CodeceptJS. We chose the simplest solution: mochawesome, a small reporter that generates an HTML page listing every test, whether it passed or failed, and a screenshot of the moment of failure where relevant. We then made this page available to our development and quality assurance teams.
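As a sketch of how mochawesome can be wired into CodeceptJS, the excerpt below shows reporting-related keys of a codecept.conf.ts, assuming the mochawesome package is installed as a dev dependency and the tests are launched with npx codeceptjs run --reporter mochawesome. The report directory and file name are assumptions; the Mochawesome helper and the screenshotOnFail plugin are how CodeceptJS is meant to attach failure screenshots to the report.

```typescript
// Excerpt of codecept.conf.ts: reporting-related keys only (a sketch).
// These would be merged into the same config object that configures WebDriver.
export const config = {
  output: "./output",
  helpers: {
    // Adds screenshots taken on failure to the mochawesome report
    Mochawesome: { uniqueScreenshotNames: true },
  },
  mocha: {
    reporterOptions: {
      reportDir: "output", // the folder mounted back to the Jenkins node (assumed)
      reportFilename: "report", // mochawesome then writes report.html here
    },
  },
  plugins: {
    // Captures the page at the moment a test fails
    screenshotOnFail: { enabled: true },
  },
};
```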

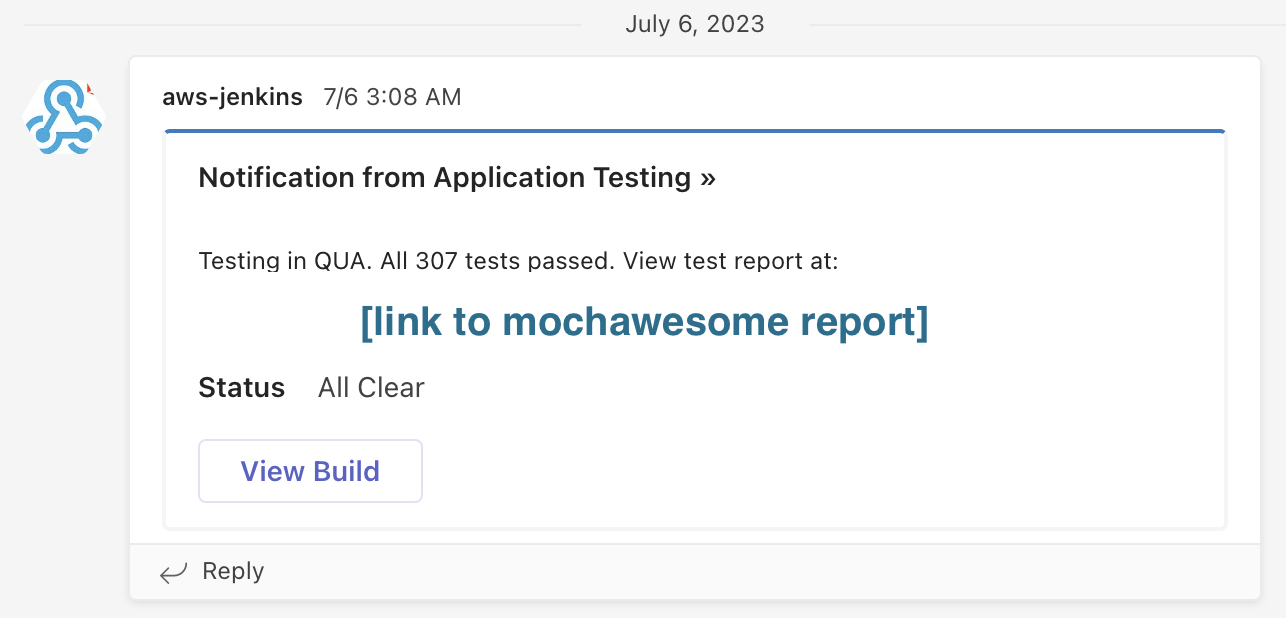

Finally, we wanted alerts to be sent out with a high-level summary of the test results. How many tests succeeded? How many failed? The pipeline pulls this summary from the mochawesome report with a simple regex command. Then, using the Office 365 Connector plugin, it sends the summary and a link to the report to Microsoft Teams. Here’s what that message looks like, with personal details obscured:

Figure 2. An example Teams alert sent from Jenkins.
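Our exact regex isn’t reproduced here, but the idea can be sketched in TypeScript. This version assumes the pipeline reads a JSON version of the mochawesome report whose stats section contains numeric fields named "tests", "passes", and "failures"; the function names, sample input, and message format are hypothetical. The resulting string is the kind of summary a pipeline step would hand to the Office 365 Connector plugin along with the report link.

```typescript
// Sketch: extract counts from a mochawesome-style JSON report with regexes
// (report layout and message format are assumptions).

function extractCount(report: string, field: string): number {
  // Match `"field": <digits>`; only numeric values match, so any
  // array-valued fields with the same name are skipped.
  const match = new RegExp(`"${field}"\\s*:\\s*(\\d+)`).exec(report);
  return match ? parseInt(match[1], 10) : 0;
}

function summarize(report: string, reportUrl: string): string {
  const total = extractCount(report, "tests");
  const passed = extractCount(report, "passes");
  const failed = extractCount(report, "failures");
  const status = failed === 0 ? "PASSED" : "FAILED";
  return `E2E tests ${status}: ${passed}/${total} passed, ${failed} failed. Report: ${reportUrl}`;
}

// Usage with a made-up report fragment.
const sample = '{"stats": {"suites": 3, "tests": 20, "passes": 18, "pending": 0, "failures": 2}}';
console.log(summarize(sample, "https://jenkins.example.com/job/e2e/report"));
// prints: E2E tests FAILED: 18/20 passed, 2 failed. Report: https://jenkins.example.com/job/e2e/report
```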

With these steps in place, the pipeline was fully functional. Thanks to the reproducibility of Docker, we could be sure that every run of the tests would occur under identical conditions. And with Jenkins, we could run our new test step at any time, incorporating it into any process. We chose to start with a nightly health check, so that every morning we could review the application’s status and note any broken features. This added a layer of safety and marked a major step forward for our development process.

At Lean Innovation Labs, we love to automate using DevOps methodologies. In doing so, we help our clients achieve greater efficiency and speed in their software development projects. If you want to take your software development to the next level, reach out to us at Lean Innovation Labs for expert guidance and support in implementing DevOps practices.